J Clin Aesthet Dermatol. 2023;16(7):54–62.

by Zachary Hopkins, MD; Oscar Diaz, BS; Jessica Forbes Kaprive, DO; Ryan Carlisle, MD; Christopher Moreno, MD; Kanthi Bommareddy, MD; Noareen Sheikh, BS; Zachary Frost, BS; Asfa Akhtar, DO; Aaron M. Secrest, MD, PhD

Drs. Hopkins and Secrest are with the Department of Dermatology at the University of Utah Health in Salt Lake City, Utah. Mr. Diaz is with the Nova Southeastern Dr. Kiran C. Patel College of Osteopathic Medicine in Davie, Florida. Dr. Kaprive is with the Department of Dermatology in HCA Lewisgale Montgomery at Virginia College of Medicine in Blacksburg, Virginia. Mr. Carlisle is with the School of Medicine at the University of Utah in Salt Lake City, Utah. Dr. Moreno is with the Department of Dermatology at the University of Arizona in Tucson, Arizona. Dr. Bommareddy is with the University of Miami’s Miller School of Medicine at Holy Cross Hospital in Fort Lauderdale, Florida. Ms. Sheikh is with the Nova Southeastern Dr. Kiran C. Patel College of Osteopathic Medicine in Davie, Florida. Mr. Frost is with the Noorda College of Osteopathic Medicine in Provo, Utah. Dr. Akhtar is with the Department of Dermatology at Cleveland Clinic Florida in Weston, Florida. Dr. Secrest is additionally with the Department of Population Health Sciences at the University of Utah in Salt Lake City, Utah, and the Department of Dermatology, Te Whatu Ora–Waitaha Canterbury (Health New Zealand), in Christchurch, New Zealand.

FUNDING: No funding was provided for this article.

DISCLOSURES: The authors report no conflicts of interest relevant to the content of this article.

ABSTRACT: Background. Adequate methods reporting in observational and trial literature is critical to interpretation and implementation.

Objective. To evaluate adherence to methods reporting guidelines in the dermatology literature and compare it with the internal medicine (IM) literature.

Methods. We performed a cross-sectional review of randomly selected dermatology and IM manuscripts published between 2014 and 2018. Observational and trial articles were retrieved from PubMed. The primary outcome was percent adherence to methods-related STROBE or CONSORT checklist items (methods reporting score, MRS). Secondary outcomes included the relationship between methods section length (MSL) and MRS. We additionally compared these outcomes with the IM literature. MRS and MSL were compared by overall article length, checklist type, field, journal, study topic, and funding source using univariable and multivariable linear regression.

Results. We identified 389 articles (172 dermatology and 217 IM). Within dermatology, we identified 83 clinical trials and 89 observational studies. Mean MRS was 61.4 percent. A one-word increase in MSL corresponded to a 0.02 percentage-point increase in MRS (β=0.02, 95% CI 0.01 to 0.03). Mean MRS was 12.8 percentage points lower in the dermatology literature than in IM (β=-12.8, 95% CI -15.6 to -9.91), and mean dermatology MSL was 345 words shorter (β=-345, 95% CI -413 to -277). Studies published in JAMA Dermatology, Journal of Investigative Dermatology, and British Journal of Dermatology, studies with government funding, and studies with supplemental methods had higher mean MRSs.

Conclusion. Methods reporting quality in dermatology was low, and the relationship between MRS and MSL was weak. These data support greater emphasis on methods reporting by researchers and stricter enforcement of checklist reporting by editorial staff and peer reviewers.

Keywords. Dermatology, clinical trial, observational study, epidemiology, methodology, study design, STROBE, CONSORT, internal medicine, journal

Thorough methodology reporting is critical to research appraisal and reproducibility.1–4 It is necessary for applying findings in context and for literature reviews that assess the risk of bias and the quality of summarized results.1,4–8 In addition, poorly reported studies may indicate bias and poor methodological rigor.1,2,4,8,9

To encourage high-quality methodology reporting, reporting guidelines such as those collected by the Enhancing the QUAlity and Transparency Of health Research (EQUATOR) Network were devised, including the CONsolidated Standards of Reporting Trials (CONSORT) guidelines for clinical trials and the STrengthening the Reporting of OBservational studies in Epidemiology (STROBE) guidelines for observational research.1,8 These checklists have been adopted by journals6,10 and guide the reporting of background, methodology, and results.

While advice to young authors rightly emphasizes concise writing,11,12 previous studies of reporting quality have hypothesized that space limitations may contribute to poor reporting.2 Our primary objective was to evaluate STROBE/CONSORT methods-based reporting in observational studies and clinical trials in the dermatology literature. Our secondary objectives were to investigate the relationship between methods section length and methods reporting quality and to compare methods reporting, methods section length, and the relationship between the two in internal medicine (IM) versus dermatology papers.

Methods

We performed a cross-sectional assessment of randomly sampled observational studies and clinical trials in the five top-cited dermatology and IM journals. This study did not require ethics approval. The five highest-impact dermatology journals at the time of the search were The Journal of the American Academy of Dermatology (JAAD), JAMA Dermatology, Journal of Investigative Dermatology (JID), The British Journal of Dermatology (BJD), and The Journal of the European Academy of Dermatology and Venereology (JEADV) (See Supplemental Material). The five highest-cited IM journals were The New England Journal of Medicine (NEJM), JAMA Internal Medicine (JAMA IM), Annals of Internal Medicine (AIM), Journal of Internal Medicine (JIM), and The European Journal of Internal Medicine (EJIM).

Eligibility criteria. We included original observational studies and clinical trials published in English between 1/1/2014 and 12/31/2018. Reviews, meta-analyses, systematic reviews, brief reports, letters, trial protocols, secondary analyses of trials, case reports, and case series were excluded. Specialized studies, such as genetic association studies or N-of-1 trials, were not included because these have their own checklists or extensions.13,14 Animal, ex vivo, and basic science studies were also excluded. For NEJM, studies needed to be internal medicine-focused.

Literature search and identification of eligible studies. Given the quantity of available literature over this time frame, a random sample was collected for each journal and year. To balance literature representation, statistical power, and person-hour feasibility, we targeted 10 studies per journal per year, for a total of 500 studies (10 journals × 5 years × 10 studies). To account for ineligible studies, we planned to identify 15 candidate studies per journal per year. Potential studies were randomly selected from a PubMed query by matching search result numbers to generated random numbers (See Supplemental Material).
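The selection step can be sketched as follows. This is a minimal illustration, not the authors' procedure (which is described in the Supplemental Material): it assumes each journal-year query returns a numbered list of results and draws 15 distinct positions uniformly without replacement with Python's `random` module. The function name `sample_result_positions`, the seed, and the result count of 312 are hypothetical.

```python
import random

# Design constants taken from the text; the seed and result count below are hypothetical.
FIELDS = 2
JOURNALS_PER_FIELD = 5
YEARS = range(2014, 2019)        # 2014 through 2018 inclusive
TARGET_PER_JOURNAL_YEAR = 10     # studies wanted for analysis
DRAW_PER_JOURNAL_YEAR = 15       # oversampled to allow for ineligible hits


def sample_result_positions(n_results: int, k: int, rng: random.Random) -> list[int]:
    """Draw k distinct 1-based search-result positions uniformly at random.

    If a journal-year query returns fewer than k results, all positions are kept.
    """
    k = min(k, n_results)
    return sorted(rng.sample(range(1, n_results + 1), k))


n_journal_years = FIELDS * JOURNALS_PER_FIELD * len(YEARS)   # 50
planned_total = n_journal_years * TARGET_PER_JOURNAL_YEAR    # 500
candidate_total = n_journal_years * DRAW_PER_JOURNAL_YEAR    # 750

rng = random.Random(2014)  # fixed seed so the draw can be reproduced and audited
# Hypothetical: one journal-year PubMed query that returned 312 results
positions = sample_result_positions(312, DRAW_PER_JOURNAL_YEAR, rng)
print(positions)  # result numbers to screen for eligibility
```

Fixing the seed is a design choice that lets a second reviewer regenerate the same candidate list; the oversampling constant makes the 750-candidate pool explicit.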

Data extraction. Extracted information included year of publication, journal, field (dermatology vs. internal medicine), journal word limit (as of 2021), PubMed identification number, study type, study topic, funding source, and use of supplemental materials. Section lengths (overall, introduction, methods, results, and discussion) were calculated (See Supplemental Material). Before extraction, author ZHH provided detailed training, written instructions, and oversight of initial data extractions, with supervision by AMS. Article information was divided among and extracted independently by authors ZHH, JF, ZF, and RC.
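As a rough illustration of how section lengths and the methods share of total length (reported in the Results) could be computed, the sketch below counts word-like tokens per section. The tokenization rule, the names `word_count` and `section_lengths`, and the assumption that overall length is the sum of the four main sections are all hypothetical; the authors' counting rules are in the Supplemental Material.

```python
import re

# Hypothetical tokenization rule: runs of letters/digits, with internal
# hyphens or apostrophes kept, so "follow-up" counts as one word.
WORD = re.compile(r"[A-Za-z0-9]+(?:[-'][A-Za-z0-9]+)*")
MAIN_SECTIONS = ("introduction", "methods", "results", "discussion")


def word_count(text: str) -> int:
    """Count word-like tokens in a block of text."""
    return len(WORD.findall(text))


def section_lengths(sections: dict[str, str]) -> dict[str, float]:
    """Word counts per main section, the overall length, and the methods share (%).

    Assumes overall length is the sum of the four main sections. Supplements
    are not passed in because they did not count toward section lengths.
    """
    lengths: dict[str, float] = {
        name: word_count(sections.get(name, "")) for name in MAIN_SECTIONS
    }
    overall = sum(lengths.values())
    lengths["overall"] = overall
    lengths["methods_pct"] = 100 * lengths["methods"] / overall if overall else 0.0
    return lengths


example = section_lengths({
    "introduction": "Reporting matters.",
    "methods": "We sampled 15 studies per journal-year at random.",
    "results": "Scores were low.",
    "discussion": "Follow-up reporting improved.",
})
print(example)
```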

Methods reporting scoring. Methods reporting scores were derived from items in the CONSORT 2010 checklist9 for clinical trials and the STROBE 2007 checklist8 for observational studies. Checklist items related to methodology reporting were selected by group consensus. Checklists were customized for each study type (e.g., cross-sectional, cohort, randomized trial, blinded randomized trial) by including only the methods reporting items relevant to that study type. Because prior studies varied in how they coded multi-part items (2a, 2b, 2c),2,15,16 we split these into individual questions to improve granularity and make missing components easier to interpret. Each finalized checklist is provided in the Supplemental Material. A similar training process, overseen by ZHH, preceded article grading. Article grading was performed by authors ZHH, JF, OD, RC, and NS; during grading, previously extracted data were checked for accuracy. Authors ZHH, OD, and KB performed quality checks of the final data to confirm accuracy and close grading agreement. Article supplements related to paper methods were included when calculating methods reporting scores but not section lengths. Unclear cases and grading disagreements were discussed with ZHH and AMS until group consensus was reached.
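The checklist customization and item splitting described above can be pictured as a small data structure. In this hypothetical sketch, each item lists its separately graded components and the study types it applies to, and `build_checklist` keeps only relevant items while expanding multi-part items into single questions (for example, item 10 becomes `10.1` to `10.3`). The excerpt paraphrases a few CONSORT 2010 methods items, but the sub-item labels, study-type names, and applicability rules are illustrative assumptions, not the authors' finalized checklists.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    item_id: str
    parts: tuple[str, ...]      # one entry per separately graded component
    applies_to: frozenset[str]  # study types for which the item is relevant


RANDOMIZED = frozenset({"randomized", "blinded_randomized"})
ALL_TRIALS = frozenset({"trial"}) | RANDOMIZED

# Hypothetical excerpt paraphrasing a few CONSORT 2010 methods items;
# sub-item numbering and applicability are illustrative assumptions.
ITEMS = [
    Item("3b", ("important changes to methods after trial commencement",
                "reasons for those changes"), ALL_TRIALS),
    Item("8a", ("method used to generate the random allocation sequence",), RANDOMIZED),
    Item("10", ("who generated the random allocation sequence",
                "who enrolled participants",
                "who assigned participants to interventions"), RANDOMIZED),
    Item("11a", ("who was blinded after assignment to interventions",),
         frozenset({"blinded_randomized"})),
]


def build_checklist(study_type: str) -> list[str]:
    """Return the single-question items that apply to one study type."""
    questions: list[str] = []
    for item in ITEMS:
        if study_type not in item.applies_to:
            continue
        if len(item.parts) == 1:
            questions.append(item.item_id)
        else:  # split multi-part items so each component is graded on its own
            questions.extend(f"{item.item_id}.{i}" for i in range(1, len(item.parts) + 1))
    return questions


print(build_checklist("randomized"))
# ['3b.1', '3b.2', '8a', '10.1', '10.2', '10.3']
```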

Outcomes. The primary outcome was the proportion of applicable checklist items reported ([# of items reported/# of total checklist items] × 100). The main secondary outcome was the association between reporting quality and methods section length. Both outcomes were compared between the dermatology and IM literature to provide context and identify possibilities for improvement. Other secondary outcomes included differences in methods reporting score and methods section length across key study characteristics such as journal, study type, funding type, and checklist used (a surrogate for observational versus trial-based literature).
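A minimal sketch of the score calculation, assuming each applicable single question is graded as reported (`True`) or not reported (`False`). The grading dictionaries and item labels are hypothetical. The second example applies an assumed all-or-nothing convention to unsplit multi-part items, showing how coding choices change the denominator and therefore the score, which is why split coding matters for comparability.

```python
def methods_reporting_score(graded: dict[str, bool]) -> float:
    """MRS = (# items reported / # applicable items) * 100."""
    if not graded:
        raise ValueError("no applicable checklist items to score")
    return 100 * sum(graded.values()) / len(graded)


# Hypothetical grading of one randomized trial with multi-part items split
split_coding = {"3b.1": True, "3b.2": False, "8a": True,
                "10.1": True, "10.2": False, "10.3": False}

# Same article if each multi-part item were instead coded all-or-nothing
# (an assumed alternative convention, for comparison only)
combined_coding = {"3b": False, "8a": True, "10": False}

print(methods_reporting_score(split_coding))               # 50.0 (3 of 6 reported)
print(round(methods_reporting_score(combined_coding), 1))  # 33.3 (1 of 3 reported)
```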

Statistical analysis. Descriptive summary statistics were calculated for all extracted variables, using frequency (%) for categorical variables and mean (standard deviation [SD]) for continuous variables. Initial comparisons of study characteristics between dermatology and IM studies were performed with Pearson’s chi-square test for categorical variables and Student’s t-test for continuous variables.

Dermatology literature analysis. Linear regression was used to compare methods reporting scores and section lengths. We used univariable models to assess differences in reporting score by journal, year, study type, study topic, funding type, presence of supplemental materials, and reporting checklist (CONSORT versus STROBE). We used multivariable linear regression models to evaluate the adjusted relationship between methods reporting score and methods section length, adjusting for overall article length, journal, reporting checklist (STROBE versus CONSORT), study topic, and funding source. We also included an interaction term between methods section length and reporting checklist to evaluate whether the relationship between methods reporting score and methods section length differs for CONSORT- versus STROBE-based analyses. For details regarding confounder selection and model equations, see the Supplement.
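To make the interaction term concrete, the sketch below fits an ordinary least squares model with a methods-section-length × checklist interaction using NumPy's `np.linalg.lstsq`. It uses synthetic, noise-free data with made-up coefficients so that the recovered estimates can be checked exactly. It is not the study data or the authors' Stata model, and it omits the journal, study topic, and funding covariates, which would enter as additional indicator columns. The coding (1 = CONSORT, 0 = STROBE) is an assumption.

```python
import numpy as np


def fit_ols(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Ordinary least squares coefficients via NumPy's least-squares solver."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


rng = np.random.default_rng(0)
n = 200
msl = rng.uniform(300, 1200, n)              # methods section length (words)
overall = msl + rng.uniform(2000, 3500, n)   # overall article length (words)
consort = rng.integers(0, 2, n)              # assumed coding: 1 = CONSORT, 0 = STROBE

# Made-up "true" coefficients for illustration only (not study estimates):
# intercept, MSL, overall length, CONSORT, MSL x CONSORT
true_beta = np.array([40.0, 0.03, 0.001, 12.0, -0.02])

X = np.column_stack([np.ones(n), msl, overall, consort, msl * consort])
y = X @ true_beta                            # noise-free, so the fit recovers true_beta

beta = fit_ols(X, y)
slope_strobe = beta[1]                       # MRS change per word, STROBE articles
slope_consort = beta[1] + beta[4]            # interaction shifts the CONSORT slope
print(np.round(beta, 4))
```

With this coding, `beta[1]` is the per-word slope for STROBE articles and `beta[1] + beta[4]` the slope for CONSORT articles, so a negative interaction coefficient means the slope is flatter for trials.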

Comparisons with internal medicine literature. Multiple linear regression was used to compare average methods section lengths and methods reporting scores between fields, adjusting for the same confounders used in the dermatology-specific comparisons. We also included an interaction term between field and methods section length to test whether the relationship between methods section length and methods reporting varied by field. Lastly, we compared methods section lengths between fields, adjusting for the same confounders. See the Supplement for details and regression equations.
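A generic form of the field comparison model with an interaction term is shown below as a sketch. It assumes dermatology is coded $\text{Derm}_i = 1$ and IM $\text{Derm}_i = 0$, with $\mathbf{z}_i$ collecting the adjustment covariates (checklist type, study topic, funding source, and overall article length); the authors' exact equations are in the Supplement.

$$
\text{MRS}_i = \beta_0 + \beta_1\,\text{MSL}_i + \beta_2\,\text{Derm}_i + \beta_3\,(\text{MSL}_i \times \text{Derm}_i) + \boldsymbol{\gamma}^{\top}\mathbf{z}_i + \varepsilon_i
$$

Under this coding, the expected change in MRS per additional word of methods is

$$
\frac{\partial\,\text{MRS}}{\partial\,\text{MSL}} =
\begin{cases}
\beta_1 & \text{IM} \\
\beta_1 + \beta_3 & \text{dermatology}
\end{cases}
$$

so testing $\beta_3 = 0$ tests whether the slope differs by field.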

Linear regression coefficients are denoted by β; values in parentheses following β are 95% confidence intervals. All p-values were two-sided, and all analyses were performed using Stata v14.2 (StataCorp LLC, College Station, TX).

Results

Study characteristics. We identified 389 studies meeting inclusion criteria, including 217 IM articles and 172 dermatology articles published between 1/1/2014 and 12/31/2018. Study characteristics are shown in Table 1. Similar numbers of eligible studies were found for all dermatology journals except JID (n=24). Within dermatology, the number of studies was similar across years and between checklist types (STROBE = 89, 51.7%; CONSORT = 83, 48.3%). Journal word limits differed between fields: 33.8 percent of IM papers came from journals allowing 4,000 to 5,000 words, whereas the highest word limit among dermatology journals was 3,500 words. The dermatology journal with the lowest word limit, JAAD, limits original articles to 2,500 words (20.9% of dermatology articles). The lowest word limit among IM journals was 2,700 words (NEJM, 23.0% of IM articles).

Average methods reporting scores were lower in dermatology (61.4% [±16.0%]) than in IM (74.2% [±12.6%]; p<0.0001). Dermatology articles averaged longer introductions and discussions but shorter methods and results sections (Table 1). Methods sections also made up a smaller share of total article length in dermatology (25.5% [±9.6%] vs. 33.3% [±9.5%]; p<0.0001).
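As a consistency check, the Student's t-test for the MRS comparison can be approximately reconstructed from the reported means, SDs, and sample sizes. This sketch is not the authors' Stata computation, and because the inputs are rounded summary statistics, the result is approximate. The two-sided p-value uses a normal approximation (via `math.erfc`), which is an assumption that is reasonable with 387 degrees of freedom.

```python
import math


def pooled_t_from_summary(m1: float, s1: float, n1: int,
                          m2: float, s2: float, n2: int) -> tuple[float, int]:
    """Student's two-sample t statistic (equal variances) from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Reported MRS summaries: dermatology 61.4 (SD 16.0), n=172; IM 74.2 (SD 12.6), n=217
t, df = pooled_t_from_summary(61.4, 16.0, 172, 74.2, 12.6, 217)

# With 387 degrees of freedom the t distribution is close to standard normal,
# so the two-sided p-value is approximated with the complementary error function.
p_approx = math.erfc(abs(t) / math.sqrt(2))
print(f"t = {t:.2f} on {df} df, approximate p = {p_approx:.1e}")
```

This gives t of about -8.8 on 387 degrees of freedom, consistent with the reported p<0.0001. The mean difference itself (61.4 - 74.2 = -12.8) matches the unadjusted β reported in the field comparison.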

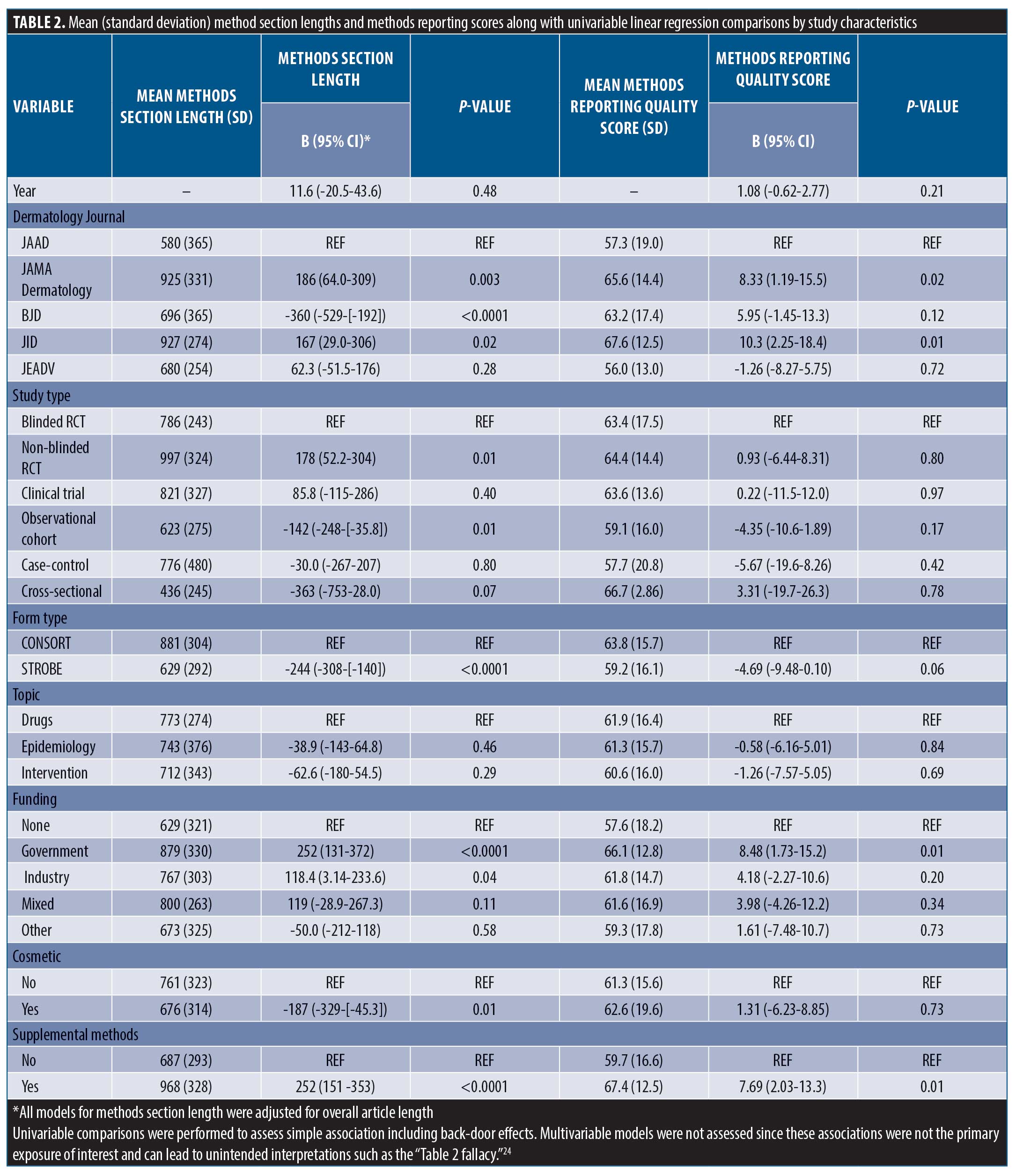

Methods reporting score, methods section length, and association between methods section length and methods reporting in dermatology. Differences in mean methods section length and methods reporting score, along with results of univariable linear regression models within dermatology, are shown in Table 2. The average reporting score for observational studies (STROBE) was 59.2 percent (±16.1%) compared with 63.8 percent (±15.7%) for trials (CONSORT) (contrast=-4.69, 95% CI -9.48 to 0.10; p=0.06). We found insufficient evidence of a change in reporting score over time (β=1.08, 95% CI -0.62 to 2.77). JAMA Dermatology and JID scored highest and had higher reporting scores than JAAD and JEADV (p<0.05 for all). Methods reporting scores of government-funded studies were, on average, 8.48 percentage points higher than those of unfunded studies (β=8.48, 95% CI 1.73 to 15.2), and articles with supplemental methods-related materials scored, on average, 7.69 percentage points higher than those without (β=7.69, 95% CI 2.03 to 13.3).

After adjusting for overall paper length, JAMA Dermatology and JID had the longest methods sections (p<0.05 for all pairwise comparisons). Observational studies tended to have shorter methods sections than clinical trials (629 [±292] vs. 881 [±304] words), and government-funded studies tended to have longer methods sections than unfunded studies (879 [±330] vs. 629 [±321] words). Articles referencing supplemental methods documents had methods sections that averaged 252 words longer (β=252, 95% CI 151 to 353).

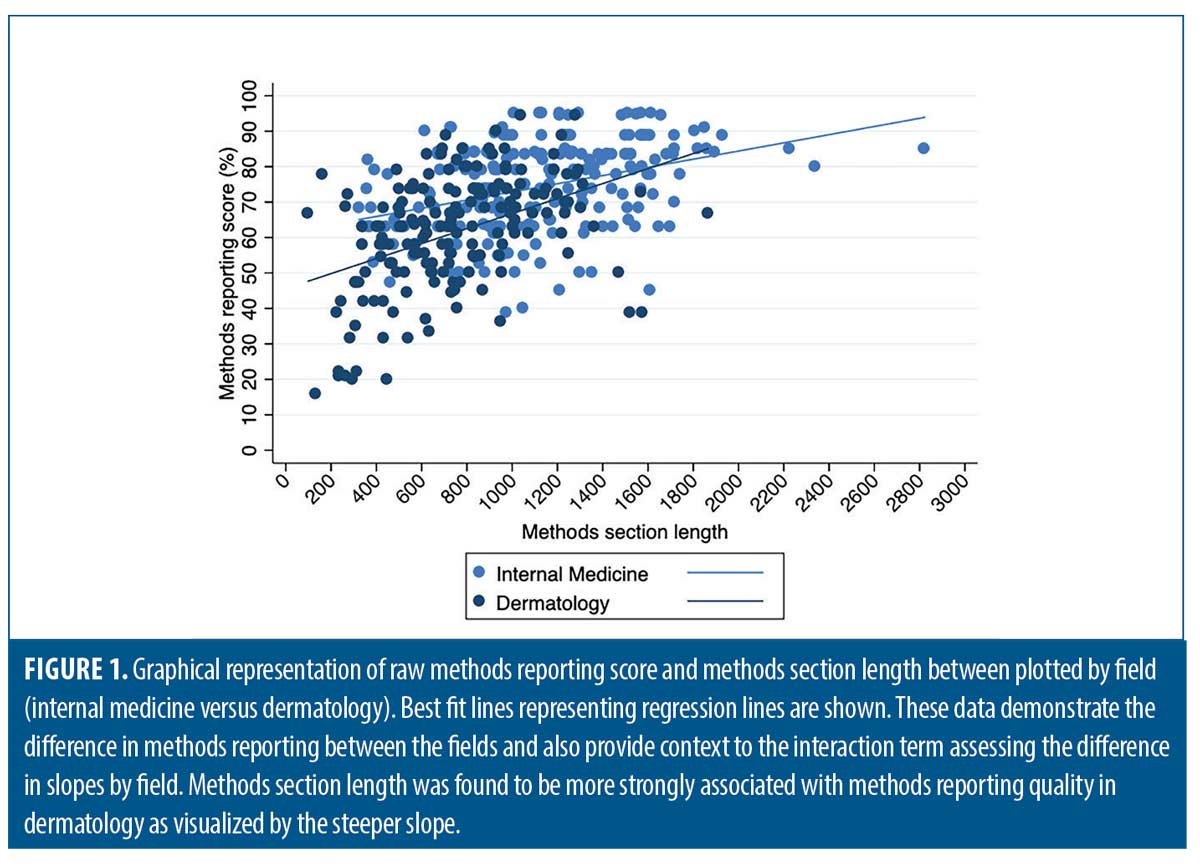

After adjusting for overall paper length, each one-word increase in methods section length corresponded to a 0.02 percentage-point increase in methods reporting score (β=0.02, 95% CI 0.01 to 0.03). This was largely unchanged after further adjustment for journal, checklist type, study topic, and funding source (β=0.01, 95% CI 0.005 to 0.02). The interaction term between methods section length and checklist type (STROBE versus CONSORT) was statistically significant (β=-0.02, 95% CI -0.03 to -0.005). The correlation between methods reporting score and methods section length was r=0.43, and the correlation between methods reporting score and methods section length as a percentage of total paper length was r=0.23.

Comparisons between dermatology and internal medicine literature.

Dermatology methods reporting scores were, on average, 12.8 percentage points lower than IM scores (β=-12.8, 95% CI -15.6 to -9.91). After accounting for overall paper length, dermatology methods sections were, on average, 345 words shorter (β=-345, 95% CI -413 to -277).

After adjusting for checklist type (STROBE versus CONSORT), study topic, and funding source, the average difference in methods reporting scores between dermatology and IM decreased to 10.7 percentage points but remained statistically significant (β=-10.7, 95% CI -13.6 to -7.73). After methods section length and overall article length were added to the model, the difference was reduced further but remained significant (β=-7.30, 95% CI -10.3 to -4.30). The interaction term between methods section length and field was significant and is shown graphically in Figure 1 (β=0.01, 95% CI 0.001 to 0.02). After adjusting for checklist type, study topic, funding source, and overall article length, dermatology methods sections averaged 257 words shorter than IM methods sections (β=-257, 95% CI -321 to -192).

Findings from methods reporting checklists. Findings from the STROBE and CONSORT methods-related checklists in the dermatology literature are reported in Table 3. Among cohort studies, frequently unreported items (not reported in ≥30% of studies) included methods for participant selection, how follow-up was determined, definition of confounders, how bias was addressed, how sample size was derived, reasoning for categorizing continuous variables, and methods for addressing confounding. Among randomized and blinded randomized trials, frequently unreported items included changes to the protocol after study commencement (including a statement if none occurred), reasons for protocol changes, trial registration documentation, settings where data were collected, sample size determination, the mechanism used to implement the random allocation sequence, steps taken to conceal it, who generated or oversaw the random allocation sequence, who enrolled participants, who assigned participants to interventions, who was blinded to interventions, and the blinding process.

Discussion

The average methodology reporting score in the top-cited dermatology literature was low (61.4%) and was similar for observational and trial literature (59.2% vs. 63.8%, respectively). We found a weak relationship between methods section length and methods reporting score, roughly equivalent to a 0.4 percentage-point increase in reporting score per 20-word sentence. This relationship may matter more for observational studies, in which larger changes in reporting score per increase in methods section length were seen. However, other differences, such as those between journals, between articles with and without methods-based supplemental documents, and by government funding, were more pronounced.
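The per-sentence figure follows from treating the regression slope as a constant per-word effect. Assuming a 20-word sentence and a linear relationship, the estimate adjusted for overall paper length gives

$$
\Delta\text{MRS} \approx \hat{\beta} \times \Delta\text{MSL} = 0.02 \times 20 = 0.4 \text{ percentage points},
$$

while the fully adjusted estimate ($\hat{\beta} = 0.01$) gives $0.01 \times 20 = 0.2$ percentage points. By the same arithmetic, adding 100 words corresponds to roughly 1 to 2 percentage points, small relative to the 12.8-point difference between fields.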

The average methodology reporting score was lower in dermatology than in IM (61.4% vs. 74.2%). This difference persisted after adjusting for confounding and was further attenuated, but not eliminated, after adjusting for methods section length and overall paper length. Likewise, the relationship between methods section length and reporting quality was less pronounced in the IM literature. This supports our interpretation that methods section length affects methods reporting only to a limited extent and that other factors are more important. It also suggests that reporting in dermatology differs meaningfully from IM and that collaborative cross-field comparisons of research, editorial, and peer review processes may be valuable.

Multiple studies have evaluated CONSORT reporting in dermatology clinical trials.2,16,17 These have shown improvement over time in at least some key aspects of trial reporting. Compared with a 2019 study evaluating CONSORT item reporting in randomized controlled trials (RCTs) from 2015–2017,2 we found higher rates of reporting the method used to generate the random allocation sequence; however, steps taken to conceal the sequence, a critical component of gauging randomization, remained infrequently reported. Likewise, methodology surrounding the blinding process was frequently not reported. Alarmingly, we also saw low rates of trial registration information (clinical trial = 4/9, non-blinded randomized trial = 26/36, and blinded randomized trial = 25/38). Pre-registration is critical for protecting against selective outcome reporting bias and improving the transparency of trial methodology.4,7 For this simple item, we recommend that journals require documentation of registration and display this information in the abstract, as several IM journals (NEJM, JAMA IM, AIM) do. Although some areas have improved, reporting quality must improve further, since deficiencies in reporting these items have been associated with biased results and obscured interpretation.1,5,18

Fewer studies have evaluated compliance with STROBE reporting guidelines.15 Although methods reporting scores were similar for STROBE and CONSORT checklists, STROBE-based scores were more closely associated with methods section length, suggesting that observational studies may be especially prone to omitting methodological detail. Discussion of confounding, model building, participant selection, and data sources is critical to proper interpretation and assessment of observational data, yet these items were frequently not reported.4,6,8,15,19–21 Among cohort studies, and similar to a 2010 study, we found low rates of reporting sample size considerations (41, 50.6%) and handling of missing data (8, 9.9%). Results for follow-up were mixed: reporting of losses to follow-up improved (72, 88.9%), but reporting of how follow-up was measured, including establishing time zero, remained low (39, 48.1%). Notably, reporting of statistical methods for evaluating outcomes also improved (68, 84.0% versus 10–18% in the 2010 study).15 In general, compliance with checklist requirements remains unacceptably low in the observational literature and must improve.

One interesting finding was the association between supplemental materials and higher reporting scores. Counterintuitively, studies with supplemental methodology materials also had longer mean methods sections. This may reflect differences in the researchers or research groups that provide supplemental materials, or in journals' editorial practices, rather than the use of supplements to abbreviate the methods section. For example, the practice of publishing clinical trial protocols and statistical analysis plans varies by journal: JAAD notes that supplemental files are for review only and not for publication, whereas JAMA Dermatology states that these supplements will be published.4 This may explain why we found no supplemental methods documents in JAAD articles but 24 in JAMA Dermatology (63.2% of its articles) and 12 in JID (48.0% of its articles). Because supplemental materials do not add to overall length, can easily be provided electronically, supply critical details regarding analysis and study plans, and are often recommended or required for peer and editorial review, we recommend that journals encourage supplemental materials and publish them when available.

A study by the Radiation Therapy Oncology Group found that the methodological rigor of trials was, on average, better than that reported in trial manuscripts.22 However, proper methods reporting remains critical to understanding the validity, context, and application of results and to their meta-analysis and reproducibility.1,4,5,8 While avoiding overly restrictive word limits may help, shortening introductions and discussions to report methods and results more fully may be a more practical approach. Likewise, we recommend placing detailed methodology in supplemental materials when needed. Stricter enforcement of reporting checklists by both editorial staff and peer reviewers will be important for driving improvement. Lastly, better education of clinicians and researchers regarding the importance of methodology reporting could improve both reporting and the use of the research literature.

Limitations. One limitation of this study is the scope of journals reviewed. Dermatologic research is also published in non-dermatology journals, and many other dermatology-specific journals exist. However, we believe the top-cited journals included offer a good representation of what dermatologists read and use to guide practice.

We could not evaluate the quality of reported items (e.g., whether the statistical methods reported were appropriate). This requires a more nuanced and subjective evaluation, for which fewer formal, consensus-based guidelines exist. Thus, this study pertains to methods reporting quality and not necessarily to the methodological quality of the papers.

Lastly, deciding whether some items were present, or fully present, was necessarily subjective in some cases. We attempted to mitigate bias through rigorous training, oversight, and quality control review, but the possibility of misclassification or bias remains. We sought to improve on previous analyses2,9,15,23 by separating multi-part items into single questions to simplify grading and interpretation.

Conclusion

Despite journal recommendations for checklist adherence, reporting of key CONSORT and STROBE items remains inadequate. Although we found a statistical relationship between methods section length and reporting quality, this relationship was small. Thus, we believe that greater emphasis on methods and results sections, improved education of clinicians and researchers, and stricter enforcement of checklist reporting by editorial staff and peer reviewers are critical for improving methodology reporting.

Supplemental Material

https://bwcbuildout.com/jcad/wp-content/uploads/Supplemental-Materials.pdf

STROBE Checklist

https://bwcbuildout.com/jcad/wp-content/uploads/STROBE-Checklist.pdf

References

- Moher D, Hopewell S, Schulz KF, et al. CONSORT 2010 Explanation and Elaboration: Updated guidelines for reporting parallel group randomised trials. J Clin Epidemiol. 2010 Aug;63(8):e1–37.

- Kim DY, Park HS, Cho S, et al. The quality of reporting randomized controlled trials in the dermatology literature in an era where the CONSORT statement is a standard. Br J Dermatol. 2019 Jun;180(6):1361–1367.

- Langan S, Schmitt J, Coenraads PJ, et al. The reporting of observational research studies in dermatology journals: A literature-based study. Arch Dermatol. 2010 May;146(5):534–541.

- Anderson JM, Niemann A, Johnson AL, et al. Transparent, Reproducible, and Open Science Practices of Published Literature in Dermatology Journals: Cross-Sectional Analysis. JMIR Dermatol. 2019 Nov 7;2(1):e16078.

- Jüni P, Altman DG, Egger M. Systematic reviews in health care: Assessing the quality of controlled clinical trials. BMJ. 2001 Jul 7;323(7303):42–46.

- Langan SM, Schmitt J, Coenraads PJ, et al. STROBE and reporting observational studies in dermatology. Br J Dermatol. 2011 Jan;164(1):1–3.

- Schulz KF, Altman DG, Moher D, CONSORT Group. CONSORT 2010 statement: updated guidelines for reporting parallel group randomised trials. BMJ. 2010 Mar 23;340:c332.

- Vandenbroucke JP, von Elm E, Altman DG, et al. Strengthening the Reporting of Observational Studies in Epidemiology (STROBE): explanation and elaboration. PLoS Med. 2007 Oct 16;4(10):e297.

- Alvarez F, Meyer N, Gourraud PA, et al. CONSORT adoption and quality of reporting of randomized controlled trials: a systematic analysis in two dermatology journals. Br J Dermatol. 2009 Nov;161(5):1159–1165.

- Weinstock MA. The JAAD adopts the CONSORT statement. J Am Acad Dermatol. 1999 Dec;41(6):1045–1047.

- Johnson TM. Tips on how to write a paper. J Am Acad Dermatol. 2008 Dec;59(6):1064–1069.

- Elston DM. Writing a better research paper: Advice for young authors. J Am Acad Dermatol. 2019;80(2):379.

- Little J, Higgins JPT, Ioannidis JPA, et al. STrengthening the REporting of Genetic Association Studies (STREGA)–an extension of the STROBE statement. Genet Epidemiol. 2009 Nov;33(7):581–598.

- Vohra S, Shamseer L, Sampson M, et al. CONSORT extension for reporting N-of-1 trials (CENT) 2015 Statement. BMJ. 2015 May 14;350:h1738.

- Langan S, Schmitt J, Coenraads PJ, et al. The reporting of observational research studies in dermatology journals: a literature-based study. Arch Dermatol. 2010 May;146(5):534–541.

- Alam M, Rauf M, Ali S, et al. A Systematic Review of Completeness of Reporting in Randomized Controlled Trials in Dermatologic Surgery: Adherence to CONSORT 2010 Recommendations. Dermatol Surg. 2016 Dec;42(12):1325–1334.

- Adetugbo K, Williams H. How well are randomized controlled trials reported in the dermatology literature? Arch Dermatol. 2000 Mar;136(3):381–385.

- Pildal J, Hróbjartsson A, Jørgensen KJ, et al. Impact of allocation concealment on conclusions drawn from meta-analyses of randomized trials. Int J Epidemiol. 2007 Aug;36(4):847–857.

- Altman DG, Royston P. The cost of dichotomising continuous variables. BMJ. 2006 May 6;332(7549):1080.1.

- Smith G. Step away from stepwise. J Big Data. 2018 Dec;5(1):32.

- Greenland S. Modeling and variable selection in epidemiologic analysis. Am J Public Health. 1989 Mar;79(3):340–349.

- Soares HP, Daniels S, Kumar A, et al. Bad reporting does not mean bad methods for randomised trials: observational study of randomised controlled trials performed by the Radiation Therapy Oncology Group. BMJ. 2004 Jan 3;328(7430):22–24.

- Nankervis H, Baibergenova A, Williams HC, et al. Prospective registration and outcome-reporting bias in randomized controlled trials of eczema treatments: a systematic review. J Invest Dermatol. 2012 Dec;132(12):2727–2734.

- Westreich D, Greenland S. The Table 2 Fallacy: Presenting and Interpreting Confounder and Modifier Coefficients. Am J Epidemiol. 2013 Feb 15;177(4):292–298.